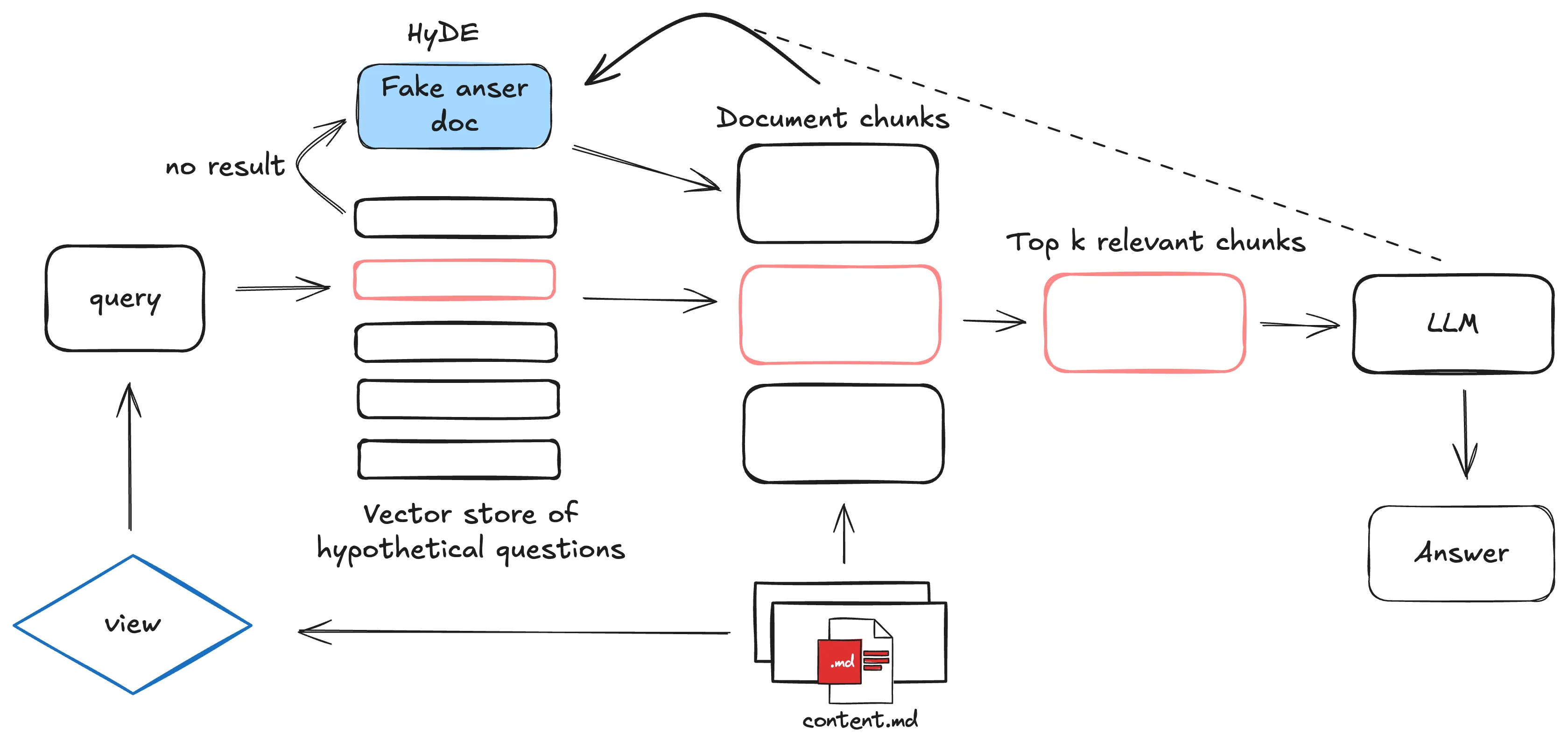

最近 llm 很火,在很多文档网站都添加了基于 rag 的问答,感觉比较有趣,于是自己也尝试了一下,实现起来也不复杂,这里使用的是 deepseek 的 api,你也可以自行替换为其他 llm 模型的接口

知识库构建与嵌入

准备环境

安装如下依赖,建议使用 miniconda 启动虚拟环境

pip install pymilvus openai flask tqdm构建 RAG 知识库

import osfrom glob import globfrom tqdm import tqdmfrom openai import OpenAIfrom pymilvus import MilvusClient, model as milvus_model

os.environ["DEEPSEEK_API_KEY"] = "..............." // 这里请填写自己的 key

# 从 Markdown 文件中提取文本text_lines = []for file_path in glob("../../src/content/**/*.md", recursive=True): with open(file_path, "r", encoding="utf-8") as file: file_text = file.read() text_lines += file_text.split("# ")

# 初始化 DeepSeek 客户端deepseek_client = OpenAI( api_key=os.environ["DEEPSEEK_API_KEY"], base_url="<url id="" type="url" status="parsing" title="" wc="0">https://api.deepseek.com",</url>)

embedding_model = milvus_model.DefaultEmbeddingFunction()

# 初始化 Milvus 客户端milvus_client = MilvusClient(uri="./blog_rag.db")collection_name = "blog_rag_collection"

# 如果集合存在,先删除if milvus_client.has_collection(collection_name): milvus_client.drop_collection(collection_name)

# 创建集合milvus_client.create_collection( collection_name=collection_name, dimension=768, metric_type="IP", consistency_level="Strong",)

# 生成嵌入并插入到 Milvusdata = []doc_embeddings = embedding_model.encode_documents(text_lines)

for i, line in enumerate(tqdm(text_lines, desc="Creating embeddings")): data.append({"id": i, "vector": doc_embeddings[i], "text": line})

milvus_client.insert(collection_name=collection_name, data=data)

print("RAG system built successfully!")代码说明

- 文本提取:从指定目录下的 Markdown 文件中提取文本,并按

#分隔段落。 - 文本嵌入:使用 DeepSeek 的嵌入模型将文本转换为向量。

- Milvus 集合管理:创建或删除名为

blog_rag_collection的集合。 - 数据插入:将嵌入向量和原始文本插入到 Milvus 集合中。

接口实现

这里使用 flask 简单写了一个接口

from flask import Flask, request, jsonifyfrom openai import OpenAIfrom pymilvus import MilvusClient, model as milvus_modelfrom flask_cors import CORS

app = Flask(__name__)

CORS(app, resources={r"/*": {"origins": "*"}})

deepseek_client = OpenAI( api_key=".............", base_url="<url id="" type="url" status="parsing" title="" wc="0">https://api.deepseek.com",</url>)

milvus_client = MilvusClient(uri="./blog_rag.db")collection_name = "blog_rag_collection"

embedding_model = milvus_model.DefaultEmbeddingFunction()

SYSTEM_PROMPT = """Human: You are an AI assistant. You are able to find answers to the questions from the contextual passage snippets provided."""

@app.route("/ask", methods=["POST"])def ask_question(): """ API endpoint to ask a question and get an answer from the RAG system. """ data = request.json question = data.get("question") if not question: return jsonify({"error": "Question is required"}), 400

# 检索相关文本 search_res = milvus_client.search( collection_name=collection_name, data=embedding_model.encode_queries([question]), limit=3, search_params={"metric_type": "IP", "params": {}}, output_fields=["text"], )

context = "\n".join([res["entity"]["text"] for res in search_res[0]])

# 构造用户问题 Prompt USER_PROMPT = f""" Use the following pieces of information enclosed in <context> tags to provide an answer to the question enclosed in <question> tags. <context> {context} </context> <question> {question} </question> """

# 使用 DeepSeek LLM 生成回答 response = deepseek_client.chat.completions.create( model="deepseek-chat", messages=[ {"role": "system", "content": SYSTEM_PROMPT}, {"role": "user", "content": USER_PROMPT}, ], )

return jsonify({"answer": response.choices[0].message.content})

@app.route("/chat_mock", methods=["POST", "GET"])def chat_mock(): """ API endpoint to get raw matched contents from RAG system for debugging. Supports both POST and GET methods. """ if request.method == "POST": data = request.json question = data.get("question") else: # GET method question = request.args.get("question")

if not question: return jsonify({"error": "Question is required"}), 400

# 检索相关文本 search_res = milvus_client.search( collection_name=collection_name, data=embedding_model.encode_queries([question]), limit=3, search_params={"metric_type": "IP", "params": {}}, output_fields=["text"], )

matched_contents = [ { "text": res["entity"]["text"], "score": res["distance"] } for res in search_res[0] ]

return jsonify({ "question": question, "matched_contents": matched_contents })

if __name__ == "__main__": app.run(host="0.0.0.0", port=5500)代码说明

- 检索相关文本:根据用户问题生成嵌入向量,并从 Milvus 中检索最相关的文本片段。

- 构造 Prompt:将检索到的文本片段和用户问题组合成一个完整提示,供 LLM 使用。

- 生成回答:调用 DeepSeek 的 LLM 生成最终回答。

- 调试接口:

/chat_mock接口用于调试,可以查看检索到的相关文本及其分数。